Online Learner AI Chatbot Assistants as Sense-Givers

There is more to developing an AI chatbot assistant than filling it with content and fluency.

“Central Library-Card Catalog-1954” by Providence Public Library via Flickr.com, CC BY-NC-ND 2.0

I recently attended Los Angeles Pacific University’s 2025 “AI In Action” conference, which included a live demonstration of the Nectir AI chatbot, an integrated AI chatbot that assists online learners directly from within the LMS. So far, AI-empowered learner assistant chatbots have been developed on three frontiers:

Instructure and Anthology are building AI-based learning assistants into their Canvas and Blackboard LMS platforms, respectively.

Nectir AI and Khanmigo are third-party applications that can be integrated into an LMS through the LTI open protocol.

A handful of university IT departments are developing their own AI-based learning assistants (see Appendix A).

It may seem obvious to any educator that training and tuning an AI chatbot to assist online learners is inherently a good thing. How could it not be? The context of this problem space, however, is more complex than it first appears: What theoretical framework should guide optimal implementation from the learner’s perspective? How would a learner know how to use one, given the wide variety of needs that emerge in online education? Which needs should we develop AI chatbots for? Should we install AI chatbots for some needs but not others?

A user-based perspective is needed to explain the context of this problem space and why it matters as an epistemic position within the online learning environment. A starting point is to review Dr. Brenda Dervin’s Sensemaking Methodology and the principles of User-based Design. From these, we will construct a framework for AI chatbot implementation according to models of human cognitive movement, sensemaking, co-orientation, and information-seeking behavior.

Sensemaking

Sensemaking is a body of cognitive research on the process by which humans create meaning from information, experiences, their environment, and their context within complex situations (Cordes, 2020). Dervin developed the contemporary tenets of Sensemaking beginning in the early 1970s to advance information science. Information systems at that time were designed according to rational, static schematics of information: users were expected to address the system and ask for what they needed, and "knowledge objects" would be delivered to them. Systems-based design ascribes no particular value to information beyond what a user is capable of asking for. Think about how library card catalogs are organized alphabetically by author or subject. If the patron already knows the author or the subject, searching the index cards may satisfy their query. If the patron does not already know, for example, that the scientific term for the study of the atmosphere is meteorology, not weather, there is no recourse. I recall a similar experience looking through the yellow pages phone book for “car repair,” only to discover that no such category existed; it was listed under “automobile repair.” Systems-based design provides truthful information, but that information is not necessarily useful under dynamic conditions.

Dervin observed, however, that humans do not always behave as systems designers expect them to. Users often lack the requisite knowledge to query the system effectively. She described humans as "squiggly": not entirely centered, sometimes chaotic, and situated in a variety of conditions (Dervin, 2003). They engage information systems from an endless variety of entry points shaped by their personal history, understanding of reality, prejudices, prior experiences, and so on. In her view, these variables inform the truth value of information within the particular situations in which users construct their individual needs. From this epistemological perspective, Dervin created an alternative to systems-based design by placing the user-based position at the center of information system design. Using the library metaphor once more, the following two dialogues illustrate the difference between systems-based and user-based design:

User: Do you have a book on Italian immigration in the late 19th century?

Systems-based librarian’s response: No

User-based librarian’s response: No, but how would that book have helped you answer your questions?

The user-based librarian recognized that the patron entered the dialogue carrying a prior narrative of cognition that led them to conclude that a particular book would help them move toward their goal. The user-based response signaled that, although that book was not available, there might be other ways to help the patron move forward. A systems-based perspective also assumes that the patron is asking about a certain book because its subject matter is literally what they are interested in. Instead, the patron may not have been interested in Italian immigration at all and may simply have wanted to locate pictures of immigrants arriving at Ellis Island.

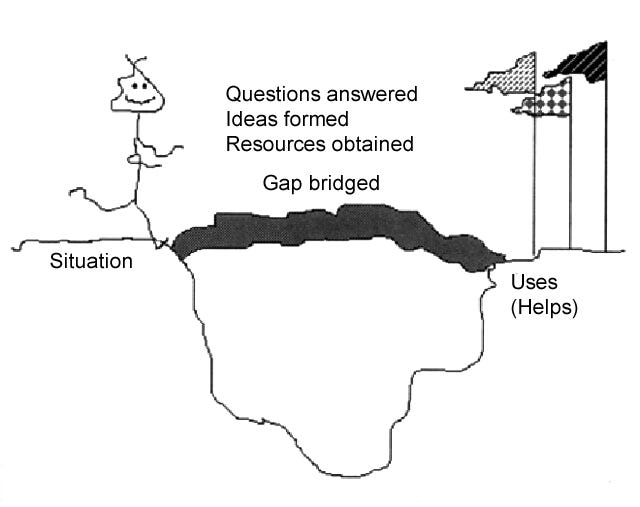

The user-based position necessitates understanding users as active information seekers within a narrative of cognitive movement in time and space. The time-space landscape, however, is "discontinuous" (Carter, 1980): the user encounters gaps in information and social engagement, unexpected events, contradictions, ambiguity, and disruptions that cause their sense to "run out" (Dervin, 1992). These encounters trigger information-seeking behavior to reconstruct one's sense of a situation. In this mode, users benefit from a variety of material, social, emotional, and enabling information that bridges gaps and overcomes stopping points, as shown in Dervin’s Situation-Gap-Use model.

Figure 1: Dervin’s (1992) Situation-Gap-Use Model

Situation: The specific context or moment in time when the individual encounters a stopping point.

Gap: The perceived discontinuity, uncertainty, or lack of knowledge that prevents achieving the goal.

Use: The ways in which information is applied to bridge the gap to continue towards the goal.

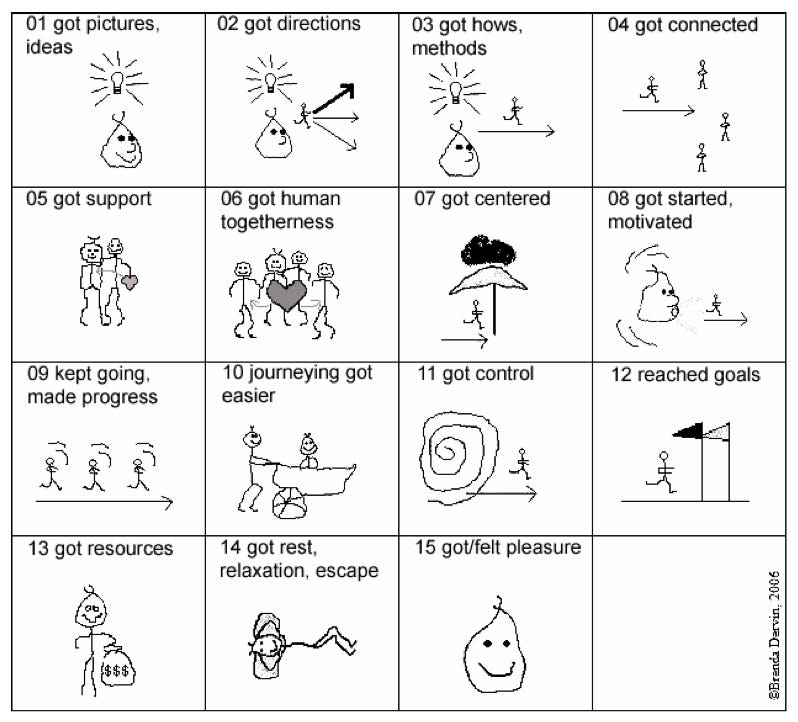

In Figure 2, Dervin (2008) visualizes how users describe themselves as having been helped by a sense-giver.

Figure 2: Dervin’s Helps

The Online Learner as Information Seeker

Online learners are fully immersed in an information environment. Their success relies heavily on their ability to navigate the LMS independently, make sense of the learning environment as a concept, and accurately interpret all forms of communication within it. The learner's experience of online education is particularly "gappy" because of the psychological effects of physical distance from the instructor (Moore, 1993) and the loss of semantic richness in asynchronous communication (Daft & Lengel, 1986). This "gappiness" occurs on several concurrent channels of the learner’s experience:

Channels of Uncertainty

Emotional Channel:

“I hardly know this professor other than through Announcements and email.”

“I'm not sure I can do all the work for this course while I'm working full-time.”

“I'm not sure I belong in this class.”

“I must pass this course to graduate.”

Orientation Channel:

“I'm not sure what the concept of this online course is.”

“Where do I find articles for this assignment?”

“I'm not sure how to approach starting this assignment.”

“I'm not sure how to interact with other students in an online discussion.”

Technical Channel:

“How do I upload a video into this course?”

“Why won’t my computer launch this application?”

“How do I convert this document into Word format?”

Intellectual Channel:

“I'm not sure I understand this concept and I can't just ask the professor when I need to.”

“I need someone to explain to me what a Literature Review is.”

Remediation Channel:

“How do I fix this assignment according to the instructor's feedback?”

When a learner encounters a gap in their sense of a situation, they construct a “problem area” composed of four elements: a history of events leading up to the stopping point, a perception of the problem that prevents further progress, a sense of what is needed to overcome the problem, and a sense of what they would do with the information and resources once they had them. With this portfolio in hand, they approach a sense-giver (a system or a person) with a profile of the problem area, seeking information to bridge the perceived gap. The sense-giver helps to redefine the problem or constraint so that the individual in the problem area can (re)make sense of their condition and continue working toward their goal (D’Eredita & Nilan, 2007).
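To make the four elements of the problem area concrete for a chatbot designer, here is a minimal Python sketch, assuming a sense-giver that records what the learner has already said and asks about whatever is still missing before offering help. The `ProblemArea` class, its field names, the wording of `QUESTIONS`, and the order in which the questions are asked are all hypothetical illustrations; they are not part of Dervin's methodology or of any existing chatbot product.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical clarifying questions, one per problem-area element.
# Dict order sets the asking order (an assumption): the first follow-up
# mirrors the user-based librarian's "how would that have helped you?"
QUESTIONS = {
    "intended_use": "How would that help you move forward?",
    "perceived_gap": "What is keeping you from moving forward right now?",
    "history": "What happened just before you got stuck?",
    "needed": "What do you think would help?",
}


@dataclass
class ProblemArea:
    """What a learner brings to a sense-giver at a stopping point (illustrative fields)."""
    history: List[str] = field(default_factory=list)  # events leading up to the stopping point
    perceived_gap: str = ""  # the problem as the learner perceives it
    needed: str = ""         # what the learner thinks would bridge the gap
    intended_use: str = ""   # what the learner would do with it once they had it

    def missing(self) -> List[str]:
        """Return the elements not yet elicited, in QUESTIONS order."""
        result = []
        for name in QUESTIONS:
            value = getattr(self, name)
            # history is a list; the other elements are free-text strings
            is_empty = not value if isinstance(value, list) else not value.strip()
            if is_empty:
                result.append(name)
        return result


def next_question(area: ProblemArea) -> Optional[str]:
    """Ask about the first missing element, or return None once the profile is complete."""
    gaps = area.missing()
    return QUESTIONS[gaps[0]] if gaps else None


# The patron from the library dialogue has stated only what they think they need.
area = ProblemArea(needed="a book on Italian immigration in the late 19th century")
print(area.missing())       # ['intended_use', 'perceived_gap', 'history']
print(next_question(area))  # How would that help you move forward?
```

A systems-based design would stop at the `needed` field and search for the book. This sketch instead asks about `intended_use` first, which is the kind of question that might reveal the patron actually wants pictures of Ellis Island.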

The Problem of Co-orientation

Fundamentally, the challenge in developing an AI-based support system is bridging the gap between the problem as the learner presents it and the information that would actually help. This is a function of co-orientation: the system, as sense-giver, needs to comprehend the user’s needs, while the user needs to “find themself” in the system, i.e., “Yes, that is my problem. That is where I got stuck!” Humans can co-orient about the nature of problems fairly efficiently, since such forms of communication are the basis of our survival as a species. AI applications, though linguistically fluent, are merely prediction systems that respond based on patterns in their training data. They do not “know,” experientially, what the user is describing as their problem, and in a first, “zero-shot” exchange they would not necessarily recognize when a learner is misinterpreting their own needs. An AI-based response is, at best, an estimation. A sense-giving chatbot must be trained to treat co-orientation as a negotiation rather than as a matter of aligning input with output. Settling co-orientation is a prerequisite for a successful encounter between a sense-maker and a sense-giver.

Emotional Labor and Human Involvement

Developers must also consider that co-orientation around certain stopping points might best be served by a human, even if it is possible (and more efficient) to provide the same information through an AI-based chatbot. The Online Peer Academic Leadership (OPAL) program at the University of New Hampshire’s College of Professional Studies Online (CPSO) exemplifies this. In the OPAL program, fully asynchronous online courses are enhanced with an additional human “concierge” who serves as an on-demand assistant for learners’ needs, though not in the areas of instruction, feedback, or subject matter expertise. The concierge, referred to as an OPAL, is a student recruited from a prior section of the same course to help learners conceptualize assignments, navigate the course, and locate academic and support resources. Having successfully completed the same course as the learners they now serve, the OPAL has a closer connection with the learner’s actual narrative of learning, including the emotional, orientation, technical, intellectual, and remediation issues that emerge. The emotional camaraderie the OPAL provides also bypasses the power differential between instructor and learner, which can inhibit help-seeking.

While it is conceivable that an AI-based solution could provide learners with similar assistance across all channels of uncertainty, some dimensions of the OPAL project align with emotional labor: the part of service-oriented professional work that involves managing emotional expression with customers. From the institution’s perspective, the OPAL relationship with learners reinforces a commitment to personalized service, warmth, empathy, and human connection while enhancing the College’s brand reputation; all of these are valuable contributors to student retention. The OPAL can de-escalate frustration, lower stress (which impacts information processing), and help learners maintain their composure simply by listening to them.

The OPAL case study is not intended to suggest that humans are inherently better at serving learners’ needs than an AI chatbot under all conditions. OPALs are not available 24/7, nor does any OPAL know how to support learners in every situation. Rather, if the greatest challenge of providing sense-giving support to online learners is co-orientation, then perhaps humans ought to serve in the channels of uncertainty where co-orientation is the most difficult.

From a broader perspective, it is unclear whether any AI sense-giving chatbot can entirely overcome the challenge of co-orientation, though there is incremental progress. OpenAI’s Deep Research mode responds to a user’s initial query by asking clarifying questions to refine its focus and scope. Google’s AI Studio can read the user’s screen and answer the user’s questions to explain how to perform a task. While these features aspire toward co-orientation, they remain “zero-shot” at the point of user engagement. Humans will always present situations and needs that non-human systems cannot understand. The question is whether these long-tail needs, set against the limitations of an AI sense-giving chatbot, leave too many gaps for the system to provide reliable value to learners.

User-based Design Challenges

Given the complexity of human cognition and behavior embedded in the semantically thin and stressful online learning environment, developing a user-based AI sense-giving chatbot can present significant design challenges:

How do we train chatbots to comprehend the context of each channel of uncertainty from a user-based perspective unless we know in advance the narrative background of every user’s entry point? How do we gather experiential data that defines the most common patterns of stopping points and when they occur?

How do we train a chatbot to discern users’ perceived gaps (lack of facts, confusion/disorientation, procedural deficits) from actual gaps? How do we account for the possibility that learners are not sure what kind of problem they are experiencing?

Is it even possible to train an AI-based system to comprehend the meaning of all of Dervin’s Helps as optimal human states? If so, can it extrapolate from learners’ uncertainties, as presented, to help them achieve the most desirable ends?

If a learner is experiencing several kinds of uncertainty at the same time, which should they address first?

Should we train chatbots to negotiate with traditional-age college learners differently than with adult learners? Or with undergraduate learners differently than with graduate learners?

Are there some forms of uncertainty that should only be addressed by humans and not a chatbot, no matter the degree of inefficiency?

In the interim, as we consider implementing AI sense-giving chatbots to serve online learners, we should proactively manage learners’ expectations until optimal sense-giving capabilities have been iterated to maturity.

Conclusion

This article is neither an exhaustive investigation of the inner workings of existing AI chatbots nor an assessment of their effectiveness; I defer to the research community to undertake that project. Rather, this is a call for stakeholders to come to consensus about the nature of the problem space and about how a revolutionary information technology asserts itself into the information-seeking needs of online learners. Developing a user-based AI sense-giving support system should consider the following:

Focus on how, not what: Gather information that explains how users define needs in different situations, how they present those needs to systems, and how they make use of what systems offer them (Dervin & Nilan, 1986). Situation-based needs are stronger predictors of the truth value of information than a collection of objective “answers” or “knowing” the user, e.g., demographic information, individual interests, and sociological dimensions.

Focus on what users think they need, not on what information is in the system that we think they need: Accept that users are “squiggly” and cannot always query a system sufficiently to convey what they actually need. Needs are constructed according to the constraints of the user’s knowledge and experience up to that point. The system must be able to elicit enough information from the user to capture the prior narrative that led to the stopping point rather than simply answering a question in isolation.

Recognize that the user’s needs may change over time: It is possible that the user may believe that they need a certain resource or form of assistance at the point of initiation and then pivot to a different expression of need as the engagement with the system progresses. Systems need to be adept at co-orienting with the user to settle the actual nature of the problem, which can be muddied by stress. Note that the value of certain forms of information or assistance may change according to the stage of the user’s journey in the problem area (in this case, a college course). Conceptual assistance, for example, may be more important at the outset of a project than at the middle or end.
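The negotiation these recommendations describe can be sketched as a loop in which the system restates its current understanding of the need and proceeds only when the learner confirms it, adopting the learner's revised statement whenever they pivot. This is a minimal Python sketch under stated assumptions: the function `co_orient`, the `CONFIRMATIONS` phrases, and the three-turn limit are hypothetical, scripted replies stand in for a real learner, and the unsettled case simply returns a flag that a designer might use to hand the learner to a human, as in the OPAL model.

```python
from typing import List, Tuple

# Hypothetical phrases the sketch treats as the learner "finding themself" in the restatement.
CONFIRMATIONS = {"yes", "y", "yes, that is my problem"}


def co_orient(initial_need: str, replies: List[str], max_turns: int = 3) -> Tuple[str, bool, List[str]]:
    """Negotiate a stated need until the learner confirms it or turns run out.

    Each reply is either a confirmation or a restated need. Returns the latest
    need, whether it was confirmed, and the system's restatements.
    """
    need = initial_need
    restatements: List[str] = []
    for reply in replies[:max_turns]:
        # Restate the current understanding instead of answering immediately.
        restatements.append(f"So you are stuck on: {need}. Is that right?")
        answer = reply.strip()
        if answer.lower() in CONFIRMATIONS:
            return need, True, restatements
        # The learner pivots to a different expression of need; adopt it and re-check.
        need = answer
    # Not settled within the turn limit; a caller might hand off to a human here.
    return need, False, restatements


need, settled, log = co_orient(
    "a book on Italian immigration",
    ["pictures of immigrants arriving at Ellis Island", "Yes"],
)
print(settled, need)  # True pictures of immigrants arriving at Ellis Island
print(len(log))       # 2
```

The design choice here is that the system never answers the first stated need directly; confirmation, not a match between query and content, is what ends the loop.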

Humans improve their condition through communication. The design of information systems should borrow from the metaphor of human conversation to co-orient on problems and streamline learners’ ability to engage with support systems effectively.

References

Carter, R. F. (1980). Discontinuity and communication. Paper presented at the East-West Institute on Communication Theory from Eastern and Western Perspectives, Honolulu, HI.

Cordes, R. J. (2020). Making sense of sensemaking: What it is and what it means for pandemic research. Atlantic Council. atlanticcouncil.org.

D’Eredita, M. A., & Nilan, M. S. (2007). Conceptualizing virtual collaborative work. In K. Crowston, S. Sieber, & E. Wynn (Eds.), Virtuality and virtualization (IFIP International Federation for Information Processing, Vol. 236). Boston, MA: Springer. https://doi.org/10.1007/978-0-387-73025-7_4

Daft, R. L., & Lengel, R. H. (1986). Organizational information requirements, media richness and structural design. Management Science, 32(5), 554-571.

Dervin, B., & Nilan, M. (1986). Information needs and uses. Annual Review of Information Science and Technology (ARIST), 21.

Dervin, B. (1992). From the mind’s eye of the user: The Sense-Making qualitative-quantitative methodology. In J. D. Glazier & R. R. Powell (Eds.), Qualitative research in information management (pp. 61-84). Englewood, CO: Libraries Unlimited. Reprinted in: B. Dervin & L. Foreman-Wernet (with E. Lauterbach) (Eds.). (2003). Sense-Making Methodology reader: Selected writings of Brenda Dervin (pp. 269-292). Cresskill, NJ: Hampton Press. © Hampton Press and Brenda Dervin (2003), reprinted by permission of Jack D. Glazier (1992).

Dervin, B. (2003). Sense-Making’s journey from metatheory to methodology to method: An example using information seeking and use as research focus. In B. Dervin & L. Foreman-Wernet (with E. Lauterbach) (Eds.). (2003). Sense-Making Methodology reader: Selected writings of Brenda Dervin (pp. 133-164). Cresskill, NJ: Hampton Press.

Dervin, B. (2008, July). Interviewing as dialectical practice: Sense-Making Methodology as exemplar. Paper presented at the International Association for Media and Communication Research (IAMCR) 2008 Annual Meeting, July 20-25.

Moore, M. G. (1993). Theory of transactional distance. In D. Keegan (Ed.), Theoretical principles of distance education (pp. 22-38). London: Routledge.

Appendix A – Universities developing their own learner assistant chatbots

Grand Valley State University - AI chatbot developed at Seidman enhances learners’ understanding of software. “An AI chatbot created by a Grand Valley faculty member and staff member is helping learners in the Seidman College of Business master key software that is implemented by businesses and government agencies around the world.”

Digital Marketing Institute - 8 Universities Leveraging AI to Drive Learner Success.

EdTech Focus on Higher Education - How Three Universities Developed Their Chatbots. “Universities are using AI to build bots that tutor learners, answer queries about campus life and offer secure access to large language models.”

Inside HigherEd - Universities Build Their Own ChatGPT-like Tools. “As concerns mount over the ethical and intellectual property implications of AI tools, universities are launching their own chatbots for faculty and learners.”

MatrixFlows - University AI Chatbots That Boost Learner Success 40%.

University of Hawai’i at Hilo - UH Hilo business learners develop AI chatbot to assist with academic advising. “The goal of the federally-funded summer project was for the learner researchers to develop an AI-based chatbot assistant that could provide personalized advice and support to business learners.”

The Return of Jill Watson: “Georgia Tech’s Design Intelligence Laboratory and NSF’s National AI Institute for Adult Learning and Online Education have developed a new version of the virtual teaching assistant named Jill Watson that uses OpenAI’s ChatGPT, performs better than OpenAI’s Assistant service, enhances teaching and social presence, and correlates with improvement in learner grades. Insofar as we know, this is the first time a Chatbot has been shown to improve teaching presence in online education for adult learners.”

University of Michigan - MiMaizey In Depth. “MiMaizey is a powerful AI assistant tailored for the University of Michigan learner community, designed to enrich daily learner life with personalized support. Whether you need information about dining options, class materials, learner organizations, or transportation, MiMaizey has you covered.”

University of Texas at Austin - UT Sage. “UT Sage is a virtual instructional designer and AI Tutor designed by UT Austin experts to support the responsible use of generative AI in teaching and learning. Instructors can use UT Sage to create interactive chatbots that enhance learner engagement and customize learning experiences. UT Sage allows instructors to design tutor sessions on any topic using established principles of learning experience design, while also ensuring learner education records are protected. AI tutors coach learners in specific topics or lessons related to their coursework.”

University of New Hampshire – DeepThought (Currently in development). “Personal AI Assistant - Converse with DeepThought on any topic. Unrecorded conversations will be lost on close. Conversations you create are saved and will automatically resume.”